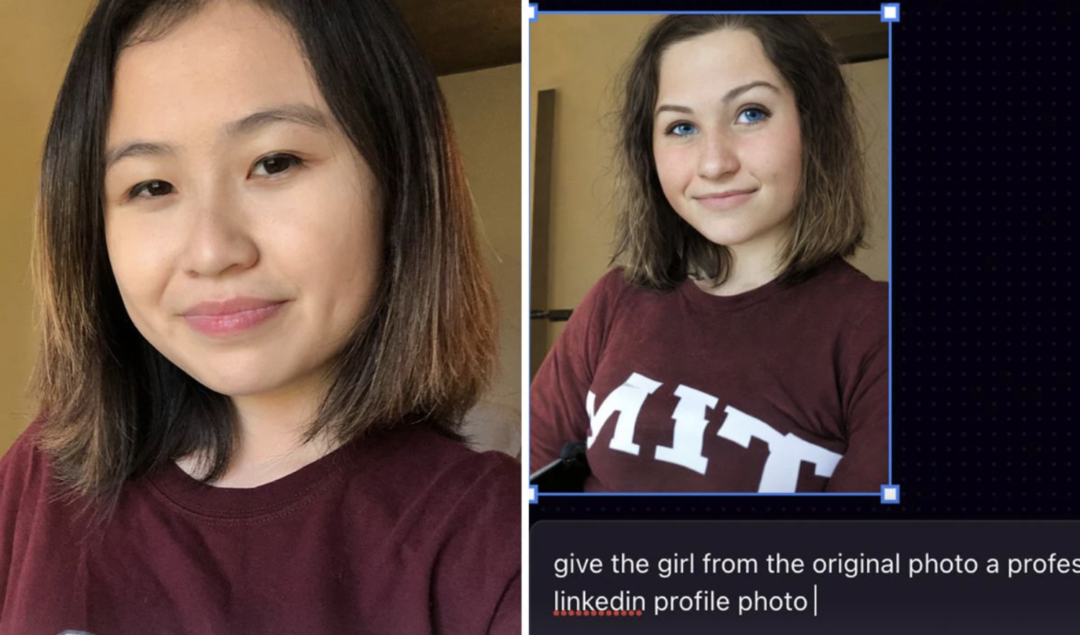

MIT Graduate Asked AI App To Make Her Headshot More Professional, It Whitewashed Her Instead

An AI image creator, Playground AI, gave an Asian MIT graduate blue eyes and lighter skin when she asked it to turn her photo into a professional LinkedIn headshot.

Rona Wang, who had majored in computer science at MIT, took to Twitter to share her surprise, adding to online debates about racial biases in generative AI.

What happened?

The Boston Globe reported that Wang uploaded an image of herself smiling and wearing a red MIT shirt to the platform, asking it to turn the image into a “professional” LinkedIn profile photo.

The tool kept the background of her photo, along with the jumper; however, it changed her facial appearance.

The AI-generated image featured blue eyes instead of her natural brown eyes and made Wang appear Caucasian, with lighter skin and freckles.

She tweeted the image and it gained traction online, sparking conversations and debate among users.

“The response showed me that while AI has amazing capabilities, it is not built for everyone,” Wang wrote in a LinkedIn post. “While I can go without a new LinkedIn profile photo, similarly biased algorithms might be used to perpetuate systemic inequalities.”

Playground AI’s response

Soon after the post circulated online, Playground AI founder Suhail Doshi replied directly to Wang on Twitter.

“The models aren’t instructable like that, so it’ll pick any generic thing based on the prompt. Unfortunately, they’re not smart enough,” he wrote.

He told Wang that Playground AI needs more detailed prompts than generative AI tools like ChatGPT.

“For what it’s worth, we’re quite displeased with this and hope to solve it.”

A reflection of society?

Wang’s post got mixed reactions on social media as it circulated, with one user saying, “AI reflects the data it’s trained on, which reflects society. It is American society that believes only white people are professional.”

“This image is quite literally a mathematical representation of the delta between our personhood and American society’s requirements for treating us like people.”

Other users disagreed, saying that given how bad the prompt was, the AI did a good job, as generative AI is not the same as an LLM and doesn’t understand context in the same way.


Bloomberg’s recent analysis of Stable Diffusion’s outputs produced disturbing but unsurprising results: the AI image generator amplified race and gender stereotypes.

The problem appears to be widespread. BuzzFeed recently published a now-deleted article on what AI thinks Barbies from different countries would look like. The results contained extreme forms of representational bias, including colourist and racist depictions. Similarly, several Black artists have raised concerns about inaccurate and distorted AI-generated images of Black people.

As Wang notes, “It’s important to assess and mitigate bias that infiltrates technology.”