Canva’s AI Image Tool Flags Black Hairstyle As Unsafe

The regulation and ethics of AI image generators have been a topic of conversation for several years, with EU lawmakers considering proposals for safeguards to address some of the issues.

In March, an open letter called for a six-month pause on training AI systems more powerful than GPT-4 while questions about regulation and ethics are answered. It has since gathered more than 31,000 signatures.

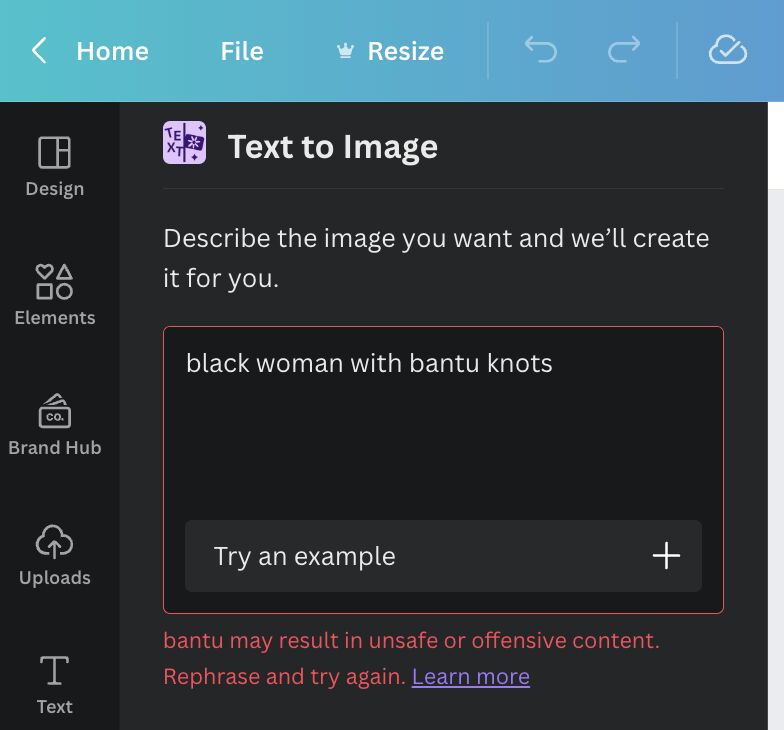

Recently, the free-to-use graphic design tool Canva came under fire after DEI Thought Partner Adriele Parker asked its text-to-image app to generate an image of a Black woman with Bantu knots.

Instead, an error message appeared: “Bantu may result in unsafe or offensive content.”

In a LinkedIn post, Parker wrote, “Tell me your AI team doesn’t have any Black women without telling me your AI team doesn’t have any Black women—my goodness. Canva, if you need a DEI consultant, give me a shout. I’ve been a fan of your platform for some time, but this is not it. Be the change. Please.”

Canva replied, “We appreciate you bringing this to our attention and apologize for any inconvenience.”

“We’ve built multiple safety features into our Magic AI-powered products, like an automated content moderation system, to ensure the results are safe and responsible.”

However, some users found this response inadequate. When another user searched Canva’s photo library for “Black woman with Bantu knots,” images of a noose appeared among the handful of images of Black women with Afro hair.

Joel Kalmanowicz, a Trust and Safety Operations Specialist at Canva, replied under the post that the company had fixed the problem and raised the concerns with the team.

“Of course, we do strive for simply not having offensive representations. That isn’t easy to do at scale, and feedback like yours is crucial to helping us find things that slip through the gaps,” he said.

When we tried the tool ourselves, the problem appeared to persist.

The wider issue

The problem may not lie with Canva or other image-generator apps alone, but with a lack of representation across the industry.

“We are essentially projecting a single worldview out into the world instead of representing diverse kinds of cultures or visual identities,” Sasha Luccioni, a research scientist at the AI startup Hugging Face, told Bloomberg.

Canva’s text-to-image app is powered by Stable Diffusion, the open-source deep-learning image generator that has taken the internet by storm.

Bloomberg’s recent analysis of Stable Diffusion’s outputs found disturbing but unsurprising results: the model amplified racial and gender stereotypes.

When the Bloomberg team asked the model to generate images of people in high-paying jobs, the results were dominated by subjects with lighter skin tones, while prompts such as “fast-food worker” and “social worker” more often produced subjects with darker skin tones.


Some experts in generative AI predict that as much as 90% of internet content could be artificially generated within a few years. Danny Wu, Canva’s head of AI products, said the company’s users had already generated 114 million images using Stable Diffusion.

At that scale, it is no surprise that concerns about racial bias are growing.

Canva has confirmed it is working on an improved, de-biased version of the Stable Diffusion model, which it expects to deploy in the near future.

Friday, June 16 Update

Canva told POCIT, “I just wanted to flag that our Trust & Safety team has looked further into the Text to Image experience and implemented a fix.”

“Thanks for bringing this to our attention.”