Meta, the tech giant formerly known as Facebook, is under scrutiny for using public Instagram posts to train its generative AI model without notifying users in Latin America, according to Rest of the World. The decision has hit artists in the region particularly hard: they rely heavily on social media to showcase their work but cannot opt out of this data use.

Lack of Notification and Opt-Out Options

On June 2, many Latin American artists discovered that Meta had not informed them about its plans to use their public posts…

Data workers are exposing the severity of exploitation in the tech and AI industry through the Data Workers’ Inquiry. As part of this community-based research project, 15 data workers joined the Distributed AI Research (DAIR) Institute as community researchers, each leading an inquiry into their own workplace. Funded by DAIR, the Weizenbaum Institute, and Technische Universität Berlin, the project sheds light on labor conditions and widespread practices in the AI industry.

The Plight of African Content Moderators

Fasica Berhane Gebrekidan, a former content moderator for…

Meta, the parent company of Facebook and Instagram, has launched an update enabling content creators in Nigeria and Ghana to monetize their content on its platforms. The new policy, effective June 27, 2024, marks an important change: previously, Facebook excluded creators with Nigerian and Ghanaian addresses from monetization unless their page was managed from an eligible country.

Expansion of Monetization Opportunities

This policy shift follows an announcement in March 2024 by Nick Clegg, Meta’s President of Global Affairs, confirming that monetization features would roll out in June. “Monetization won’t…

Meta’s ad algorithms show racial bias by disproportionately steering Black users towards more expensive for-profit colleges, a recent study found. In “Auditing for Racial Discrimination in the Delivery of Education Ads,” researchers from Princeton and the University of Southern California present a third-party auditing method for evaluating racial bias in the delivery of education ads on platforms such as Meta.

Algorithm Education Bias

The method allows external parties to assess and demonstrate the presence or absence of bias in social media algorithms, which had not previously been examined for education ads. Prior audits revealed discriminatory practices…

Meta recently announced the formation of an AI advisory council made up entirely of white men, sparking backlash amid ongoing debate about diversity and inclusion in the tech industry.

The Composition And Role Of The AI Council

The AI advisory council is separate from Meta’s board of directors and its Oversight Board, both of which have more gender and racial diversity. Unlike those bodies, the AI council was not elected by shareholders and has no fiduciary duty. Meta told Bloomberg that the council would provide “insights and recommendations on technological advancements,…

More than 200 groups, including civil society organizations, researchers, and journalists, have written to the CEOs of major tech companies, urging them to take action to ensure truthful online content and safeguard democratic processes. The letter, sent to the CEOs of Discord, Google, Instagram, Meta, TikTok, X, YouTube, Pinterest, Reddit, Snap, Rumble, and Twitch, coincides with upcoming elections in more than 60 countries in 2024.

The Impact Of Online Discourse On Democracy

“Research illustrates that individual users can have an outsized impact on online discourse, which results in real-world harms, such as the rise of extremism and violent attempts to overthrow democratic…

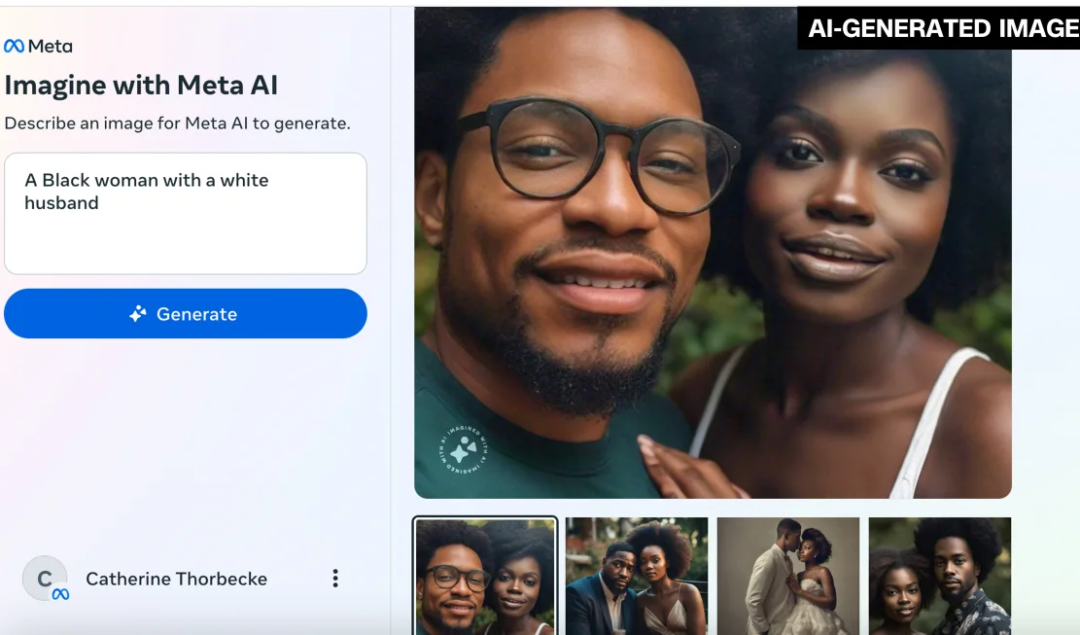

Meta’s AI-powered image generator has come under scrutiny for struggling to generate images of interracial couples and friends.

Meta’s AI Fails To Generate Interracial Couples

The tool, introduced in December, showed its shortcomings when CNN tested its ability to create pictures of people from different racial backgrounds. Requests for images of interracial relationships consistently produced images of same-race couples or friends, at odds with the diversity of real-world relationships. For instance, a request for a Black woman with a white husband yielded images of Black couples. This pattern was broken…

Colorintech and Meta have launched the Spectrum AI Accelerator, designed to harness the potential of diverse UK technology founders. Colorintech is an award-winning nonprofit working towards a more transparent and inclusive tech economy. It is known for its student programs and for working with governments, universities, VCs, and technology companies to help close the wealth and opportunity gap for underrepresented individuals.

The AI Roadshow

The program takes the form of a “Roadshow” in which the two partners will cover three core areas with different stakeholders in the community, helping them benefit from AI. The Roadshow is a visionary…

Companies such as Google, which made pledges after the murder of George Floyd in 2020, have since cut back on Diversity, Equity, and Inclusion (DEI) initiatives and hiring. In the wake of Floyd’s murder, companies pledged to prioritize DEI: between June and August 2020, corporate DEI roles increased by 55%, and leading corporations pledged $12.3 billion to fight racism. Google, in particular, aimed to improve the representation of underrepresented groups in leadership by 30% by 2025 and to address representation issues in hiring, retention, and promotion. Over the last year, however,…

Kenyan President William Ruto has announced that Meta has agreed to enable content monetization in Kenya, benefitting the country’s creators. Following a year-long negotiation between Meta and the Kenyan government, Facebook and Instagram content creators in Kenya will be able to earn money from these platforms.

Paying Kenyan Content Creators

The President revealed that Meta had run a pilot program with eligible Kenyan content creators. “I have good news for our creatives and those who imagine and produce content through Facebook and Instagram,” Ruto said during the Jamhuri Day celebrations at Uhuru Gardens in Nairobi. “Just yesterday, Meta committed…