Radical Technoculture for Racial Equity

In the

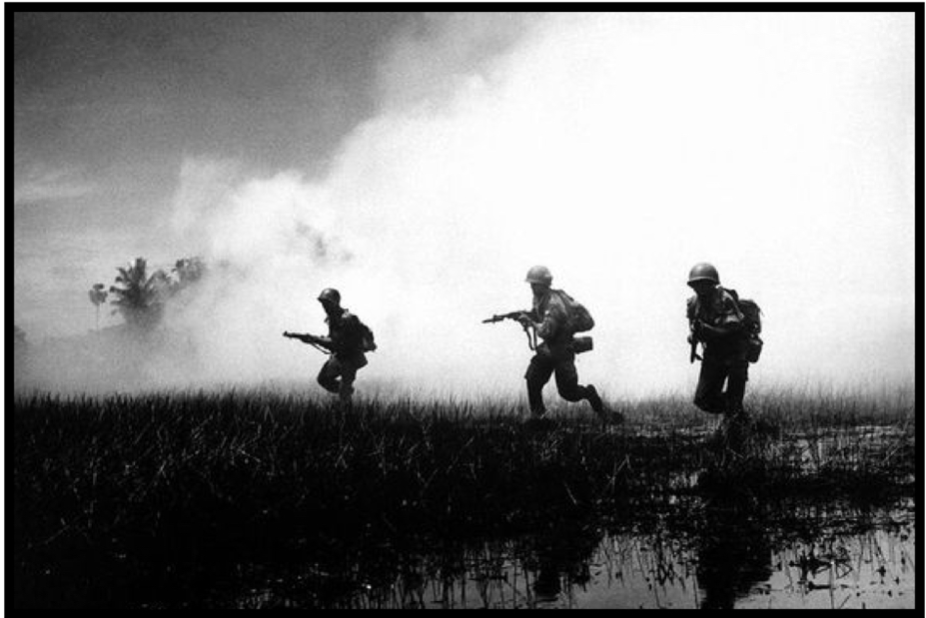

But the research extended beyond ARPA, as the U.S. military was also exploring ways to design poverty and societal despair. “ARPA research carried out by the CIA included assassinating tribal leaders, forcibly relocating villages, and using artificially induced famine to silence and pacify rebellious populations.” [2] Ramparts magazine identified similarities between the tactics used against Southeast Asians in the 1970s and those used on [Native American] reservations in the 19th century. The intelligence gained was ideal to bring back to the United States, where it was turned particularly against Black people living in urban areas who were in the midst of sustained race riots.[3]

Eventually, ARPA’s research was commercialized into the internet. The U.S. military funded universities around the country to continue this research, founding the first collegiate computer science programs.[4] Before moving on from this brief history of the internet, it is also important to note that most of the computer scientists and engineers in its early stages of development were White/European-American men. Their values, Western values, were digitized into our new form of electronic communication, reinforcing those values in every engagement with this tool.

The internet was, most importantly, a design philosophy, one prioritizing decentralized configurations for mass communication. But it was also a design decision that today’s internet be accessible from anywhere and everywhere, as the internet needed to be “as ubiquitous as the American security apparatus that financed its construction,” aka the military.[5]

Counterculture communities saw technology as a potential tool for personal liberation and utopian futures. Stewart Brand, creator of the Whole Earth Catalog, is celebrated for pioneering a relationship between hippie counterculture and human-computer interactive technologies. Steve Jobs is quoted describing the Catalog as “Google in paperback form, thirty-five years before Google came along.” This made Brand central to Silicon Valley’s history.

Though the magazine encouraged and offered visions of a new social order in opposition to the Vietnam War, it wasn’t traditionally political.

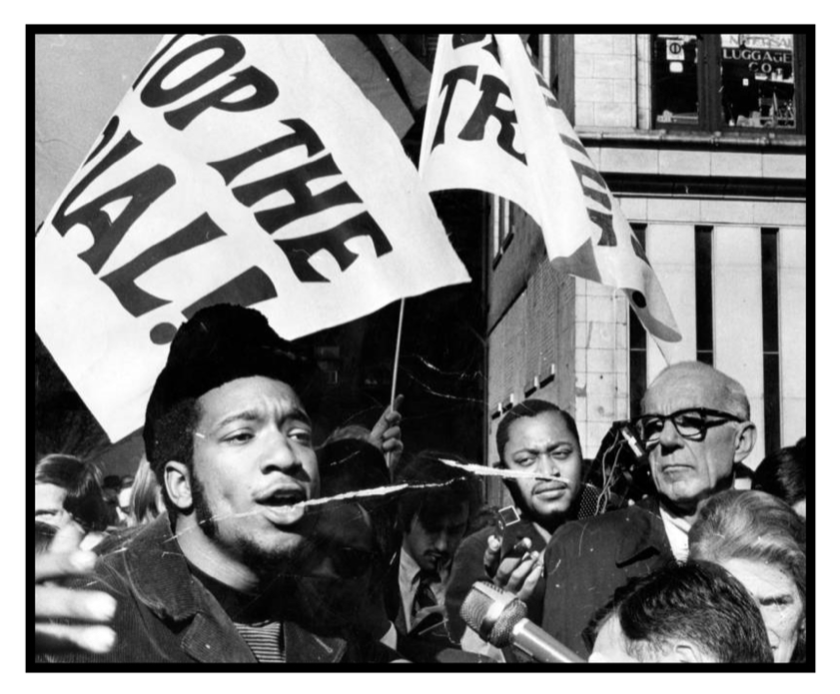

So it came as a great surprise to readers when Stewart Brand invited the Black Panther Party to guest edit an entire edition of his subsequent magazine, CoEvolution Quarterly. These were two entirely different subcultures, both heavily influenced by Eastern ideologies. But the hippie counterculture of Whole Earth “vicariously imagined life off the grid, while the Panthers tried to imagine their survival on the grid.” [6]

In June of 1944, the GI Bill was signed into law. The legislation was designed to bolster the American economy by awarding substantial financial relief to war veterans in the form of accessible mortgages and tuition stipends. This support was also heavily tied to the U.S.’s fear of the 1917 Russian Revolution, as “government officials came to believe that communism could be defeated in the U.S. by getting as many white Americans as possible to become homeowners, the idea being that those who owned property would be invested in the capitalist system.”[7]

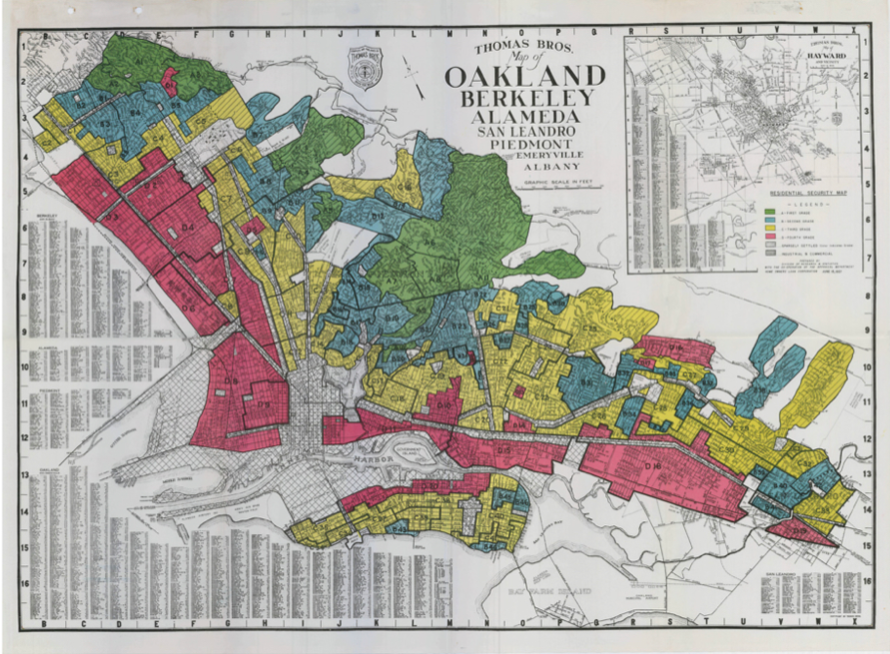

But the support from the GI Bill went almost exclusively to White war veterans, locking many Black war veterans out of that financial prosperity. Black Panther Party meetings were held in the homes of the few Black war veterans who were able to receive government assistance through the GI Bill, but assistance to the Black community was incredibly rare. This lack of assistance contributed significantly to the racial wealth gap, as real estate continues to this day to be the greatest driver of wealth for U.S. citizens. The government supported the discriminatory practices of housing associations, such as denying Black families mortgages, segregating thriving integrated communities, and redlining districts. These progressively more segregated Black communities were steadily depleted of basic human resources like fresh produce and accessible healthcare. Black families were forced to live on the outskirts of communities, closer to warehouses and commercial property.[8,9]

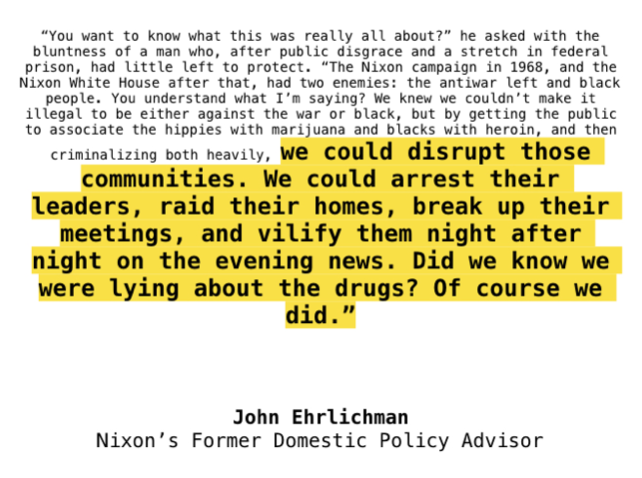

In the mid-1960s the U.S. was boiling with political dissent, from the Civil Rights movement to the rise of the hippie. Richard Nixon entered office in 1969 with two enemies: the antiwar left and Black people. And while the ’60s and ’70s were a heavily psychedelic era of drugs and rock ’n’ roll, with LSD even being tested and then recreationally used by developers of the internet, Black men and women were being criminalized for using offshoots of those drugs, like crack.

Nixon’s former Domestic Policy Advisor bluntly told a reporter that the “War on Drugs” was a ploy to target and destroy the credibility of the Black community.[10] The War on Drugs contributed significantly to the over-policing of Black and Brown communities and to a heightened prison-industrial complex.

So, in considering what sort of technoculture we’re encountering, we need to look at what sort of society these digital technologies are being birthed out of, because “what matters is not the technology itself, but the social or economic system in which it is embedded.”[11] As the saying goes, “garbage in means garbage out.”

If the infrastructure of digital technologies is being designed by a homogeneous group of cis white men, within a racist and classist society with oppressive ideologies, the technology can only replicate those ideologies and embed them into today’s algorithms, contrary to the first-wave cybernetic belief that technology is self-correcting.

For example, remember the all-white, all-male computer developers of the ’60s and ’70s (and computer science programs of today still often have similar demographics)? This sort of homogeneous thinking allows systems to be tested on homogeneous inputs. And when you’re testing facial recognition technologies only on white men, it’s very easy for services like auto-tagging on the web-based photo service Google Photos to identify images of Black people as animals. Here, a friend of mine, Jacky Alcine, virally calls out Google for identifying his darker-skinned friend as a gorilla.

If this faulty AI is being deployed for mass consumption with inadequate datasets, what can we imagine for the future of automation in the automobile industry? I’ve had conversations with colleagues describing the rapid innovation, absent sociological research, on driverless cars. This is awfully concerning considering how poorly computer vision continues to perform on darker skin tones.

And if a driverless car must decide who lives and who dies in a split-second reaction to avoid a crash, whom will the car choose? The question is especially pressing if the car has 5% more difficulty identifying darker skin tones than lighter ones, as found in “Predictive Inequity in Object Detection,” a study out of Georgia Tech.

IBM’s facial recognition technologies have also been used by the NYPD to surveil marginalized communities, in an effort to develop technology that lets police search by skin color.[12] Amazon has deployed faulty facial recognition technologies to police departments for live use in body cameras, continuously searching for matches between the civilians police interact with and law enforcement databases of alleged criminals. Because these technologies are plainly unreliable, this sort of practice can directly affect a citizen’s ability to walk free.

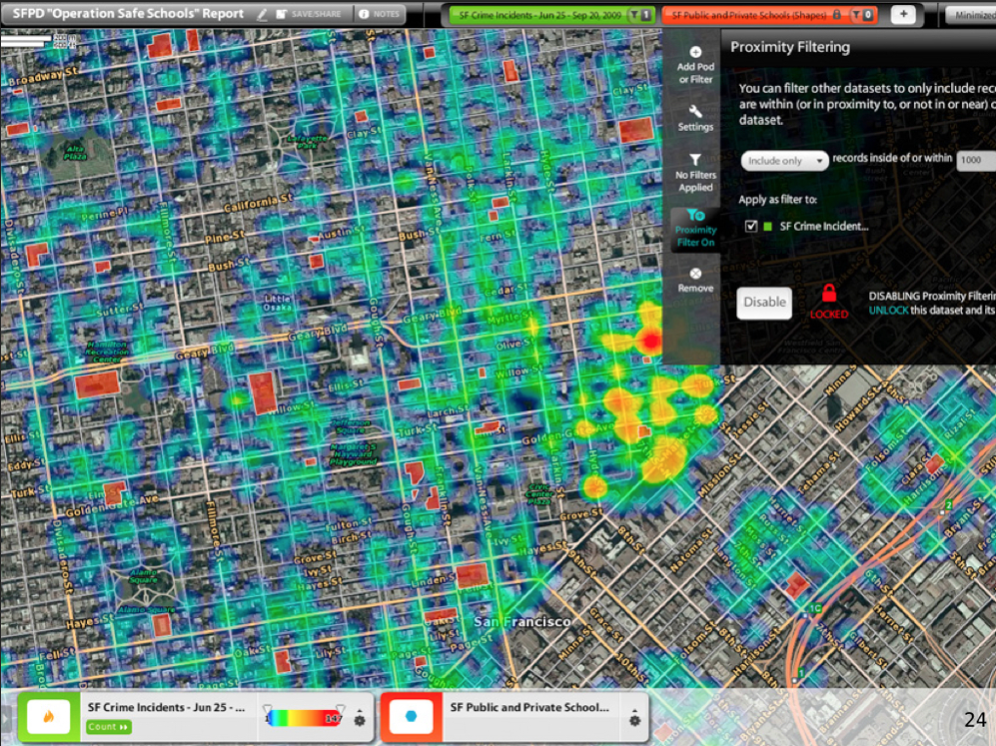

And remember the War on Drugs? Well, the over-policing of Black and Brown communities became input for databases on community criminal activity. With municipal budget cuts all across the country, police departments are hiring fewer officers and purchasing predictive policing software, hoping to be in the right place at the right time to “reduce” criminal activity. So, garbage in, garbage out. Racist police practices are being embedded into criminal-identifying services, replicating analog racist practices in virtual and ever-widening domains. For one, the algorithms are produced through machine learning technologies, making the procedures both proprietary and a mystery even to the creators of these technologies. The developers claim they are not obligated to release the inner workings of these predictive services because they’re protected as intellectual property. But the developers are also unsure how the predictive policing software arrives at its results, because with the form of artificial intelligence being used it is nearly impossible to understand the inner workings.
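To make the “garbage in, garbage out” loop concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a model of any real predictive policing product: the neighborhood names, the starting record counts, the patrol budget, and the rule that patrols are assigned in proportion to past records are all hypothetical. Both neighborhoods are given the same underlying rate of incidents; only the historical records differ.

```python
def simulate_patrols(recorded, true_rate, patrols_per_round, rounds):
    """Assign patrols in proportion to past records, then log what they find.

    All inputs are hypothetical. `recorded` maps an area to its historical
    incident count, and `true_rate` maps an area to the incidents found
    per patrol sent there.
    """
    recorded = dict(recorded)  # copy so the caller's data is left untouched
    for _ in range(rounds):
        total = sum(recorded.values())
        # "Prediction" step: more past records means more patrols.
        patrols = {area: patrols_per_round * count / total
                   for area, count in recorded.items()}
        # "Data collection" step: patrols only record what they are sent to see.
        for area in recorded:
            recorded[area] += patrols[area] * true_rate[area]
    return recorded


def share(recorded, area):
    """Fraction of all recorded incidents attributed to one area."""
    return recorded[area] / sum(recorded.values())


# Hypothetical starting point: one area was historically over-policed.
history = {"over_policed": 60.0, "under_policed": 40.0}
# Assumption: the underlying rate is identical in both areas.
rates = {"over_policed": 0.1, "under_policed": 0.1}

result = simulate_patrols(history, rates, patrols_per_round=100, rounds=10)
print(f"share before: {share(history, 'over_policed'):.2f}")
print(f"share after:  {share(result, 'over_policed'):.2f}")
```

Under these assumptions the over-policed area keeps the same outsized share of recorded incidents round after round, even though nothing distinguishes the two areas except where the patrols were sent. The data never gets the chance to correct itself.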

Another faulty AI service, COMPAS, is a risk-assessment algorithm used to estimate the likelihood of recidivism.[13] In this slide we can observe wildly unequal outcomes for two different incarcerated citizens, reminding us once again: garbage in, garbage out. And a dangerously common mindset within technological innovation is that there is a sort of dichotomy between computational advancement and the humanities.

It’s in moments like these, when we’re reminded of how societal issues like redlining are being naively embedded into algorithms, that we also remember how vital close collaboration between sociologists and technologists is. We previously examined how redlining pushed Black communities to the remote outskirts of areas.

When Amazon first announced its same-day delivery service, these same segregation strategies lent themselves well to excluding predominantly Black and Brown neighborhoods from being served by Amazon.[14] That included Roxbury in Boston, which sits in the direct center of all the other Boston districts that same-day delivery catered to. Amazon was quick to apologize and admit that it was a blind spot in their algorithm, attributing the fault to the inconvenience of reaching those areas. But had there not been this false sense of dichotomy between the humanities and the technologists, these sorts of grave mishaps could have been avoided.

So, in what ways should we be mindful of these racist algorithms perpetuating themselves virtually into the future? I’m personally worried about a few different forms. For instance, what does a workers’ union look like in a sharing economy built on peer-to-peer apps like Uber and Lyft, where drivers have reported that “Uber changes its commission rates at a moment’s notice, cutting into drivers’ incomes”? [15]

What does it mean for developing countries, like Cuba, when their governments say yes to U.S. tech giants like Google developing their internet infrastructures?[16]

This is especially concerning considering that, in a turn not unlike the Vietnam War, the next potential world war is predicted to be rooted in cyber warfare. Countries like Cuba will then be at the mercy of U.S. tech giants, who now have instantaneous access to vital resources like water and power supply via their internet infrastructure.

But I have hope, and here’s why. Remember the War on Drugs? Well, Bail Bloc, a web application created by the collective Dark Inquiry, mines cryptocurrency and donates the proceeds to free incarcerated people awaiting trial.[17] And predictive policing? That same collective turns racist predictive policing on its head through their app, White Collar Crime Zones, which uses machine learning to predict where financial crimes are most likely to occur in the U.S.[18] And all those White men?

I’m especially thankful for spaces like Afrotectopia, a social institution most popularly experienced in the form of a New Media Arts, Culture and Technology festival that I founded while a graduate student. There, socially conscious minds innovating at the intersections of art, design, technology, Black culture and activism have convened to disseminate research and design more racially equitable futures. Some presenters at Afrotectopia have included:

Stephanie Dinkins, whose work centers on AI as it intersects with race, gender, aging, and our future histories. Stephanie works with Bina48, one of the world’s most advanced social robots, exploring emotion and relationship development with an AI system.

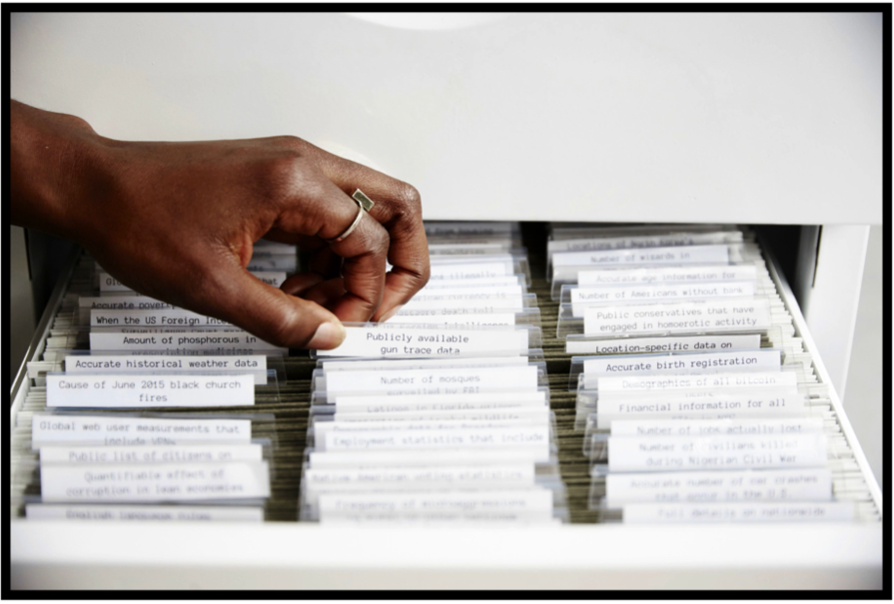

There’s Mimi Onuoha, a Nigerian-American artist and researcher whose work highlights the social relationships and power dynamics behind data collection.

There’s also the Iyapo Repository, by Ayodamola and Salome, a resource library that houses a collection of digital and physical artifacts created to affirm and project the future of people of African descent. The item pictured is Artifact: 025, a GPS necklace that vibrates to alert its owner when they are at the cross streets of a police-involved shooting in New York City, a futuristic reminder that black lives matter.

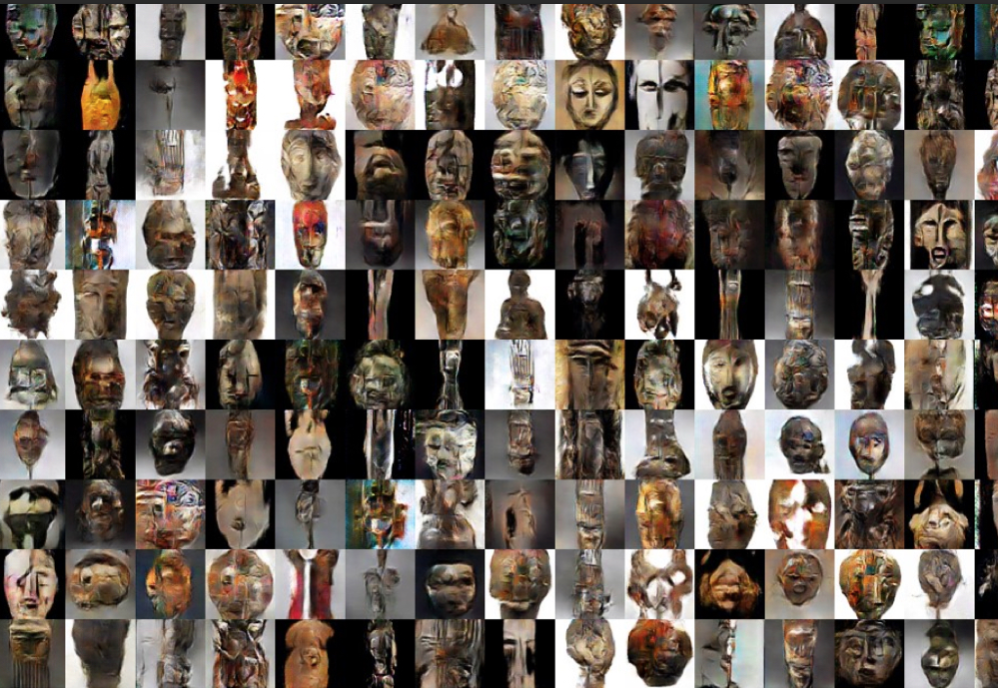

And there’s Victor Dibia’s work using machine learning to virtually render artificial high-resolution African masks from data. This is of great significance considering how much of Black and African American history was eradicated as we were kidnapped and trafficked to the Americas. AI holds great potential in allowing us to use data to realize forgotten, stolen and no longer available histories.

Just as there exists an FDA to monitor and regulate consumables for proper function, regulation within the tech sphere is necessary, and I hope this collection of research emphasizes just how necessary.

Afrotectopia has created spaces for the community to collectively ideate just what that might entail, through the development of an Anti-Racist Technoculture manifesto for equitable futures.

I also want to point you towards other institutions that are doing excellent work within this field to push for a more equitable, sustainable and holistic technological practice, like AI Now based out of NYU, the Algorithmic Justice League, and Data & Society.

Cited References:

1. Yasha Levine, Surveillance Valley, (Public Affairs, 2018), p. 30

2. Ibid. p. 29

3. Ibid. p. 30

4. Ibid. p. 47–71

5. Dag Spicer, Gwen Bell (Computer History Museum), Jan Zimmerman, Jacqueline Boas, Bill Boas (AbbaTech), “Internet History of the 1960s”, Computer History Museum, October 2019

6. Andrew Blauvelt, Hippie Modernism: The Struggle For Utopia, (Walker Art Center, 2016), p. 120

7. Richard Rothstein, Color of Law, (Liveright Publishing Corporation, 2017), p. 60

8. Ibid. p. 17–57

9. “A World Without NEPA: Parramore, Orlando”, ProtectNEPA, September 2019

10. Tom LoBianco, “Report: Aide says Nixon’s war on drugs targeted blacks, hippies”, CNN, March 24, 2016

11. Langdon Winner, Technological Determinism

12. George Joseph, Kenneth Lipp, “IBM Used NYPD Surveillance Footage to Develop Technology That Lets Police Search By Skin Color”, The Intercept, December 6, 2018

13. Karen Hao and Jonathan Stray, “Can you make AI fairer than a judge? Play our courtroom algorithm game”, MIT Technology Review, October 17, 2019

14. David Ingold and Spencer Soper, “Amazon Doesn’t Consider the Race of its Customers. Should It?”, Bloomberg, April 21, 2016

15. Susie Cagle, “To get a fair share, sharing-economy workers must unionize”, Al Jazeera America, June 27, 2014

16. Nate Swanner, “Obama says Google has a deal in place to bring Wi-Fi and broadband internet access to Cuba”, The Next Web, March 21, 2016

17. The New Inquiry, “Bail Bloc 2.0: A cryptocurrency scheme against bail — and ICE”, The New Inquiry, November 2018

18. Sam Lavigne, Francis Tseng and Brian Clifton, “White Collar Crime Risk Zones”, The New Inquiry, April 26, 2017