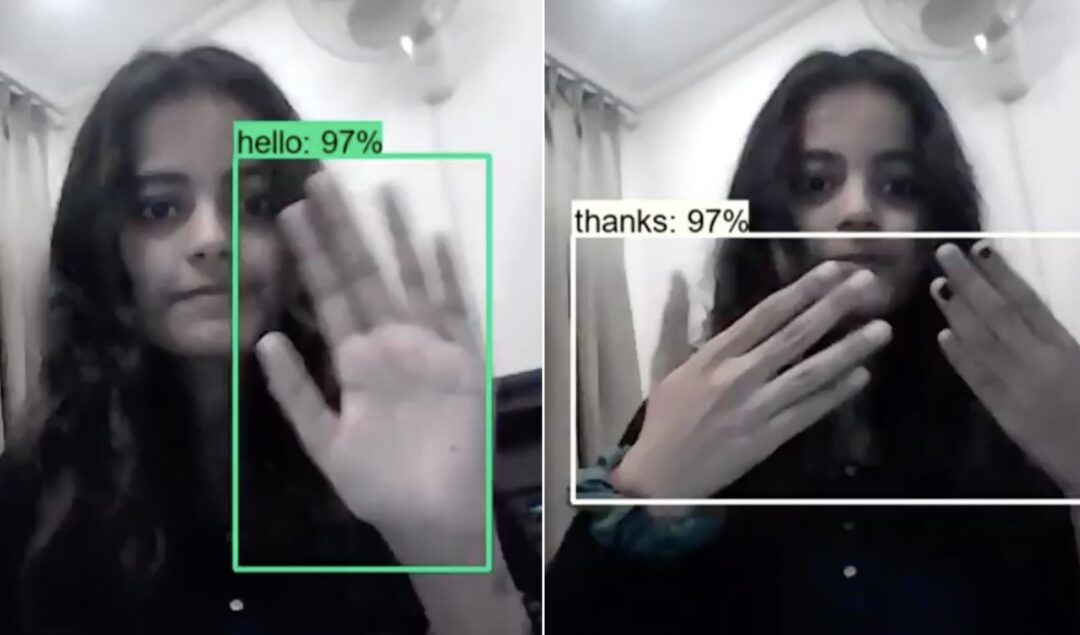

Indian Student Uses AI To Translate ASL In Real Time

Priyanjali Gupta, a fourth-year computer science student specializing in data science at the Vellore Institute of Technology, went viral on LinkedIn after using AI to translate American Sign Language (ASL).

Gupta got the idea from her mom, who pushed her to put her engineering degree to good use.

“She taunted me,” she told Interesting Engineering. “But it made me contemplate what I could do with my knowledge and skillset.”

“The dataset is made manually by running the Image Collection Python file that collects images from your webcam for or all the mentioned below signs in the American Sign Language: Hello, I Love You, Thank you, Please, Yes, and No,” Gupta shared in her GitHub post.

Learning from YouTube

Gupta built the model with TensorFlow Object Detection to translate a few American Sign Language (ASL) signs into English, using transfer learning from a pre-trained model created by Nicholas Renotte.
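For readers curious what the setup for such a project might involve, here is a minimal sketch of one common preparatory step: writing a label map for the six signs named in Gupta's GitHub post. The sign names come from the article; the helper name `build_label_map`, the ordering, and the id assignments are assumptions made for illustration and are not taken from Gupta's or Renotte's code. The text layout follows the `item { name, id }` label-map format used by the TensorFlow Object Detection API, where ids start at 1 because 0 is reserved for the background class.

```python
# Hypothetical sketch: the sign names are from the article; the ids,
# ordering, and helper name are assumptions, not Gupta's actual code.
SIGNS = ["Hello", "I Love You", "Thank you", "Please", "Yes", "No"]


def build_label_map(labels):
    """Return label-map text with one item per label, ids starting at 1."""
    entries = []
    for index, name in enumerate(labels, start=1):
        # Each entry maps a human-readable class name to an integer id.
        entries.append(f"item {{\n  name: '{name}'\n  id: {index}\n}}\n")
    return "\n".join(entries)


label_map_text = build_label_map(SIGNS)

if __name__ == "__main__":
    print(label_map_text)
```

In a transfer-learning workflow, a file like this would sit alongside the labeled webcam images so that the pre-trained detector can be fine-tuned on the new classes.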

Renotte, an Australian accountant turned data scientist and AI specialist, taught a machine learning algorithm to detect, decode and transcribe sign language in real-time.

“I think it’s for the greater good,” he told Business Insider. “Let’s say you don’t actually speak sign language … we can use models to translate it for you. It’s improving accessibility.”

“Right now, we’ve got a reasonably high level of accuracy: up to 99% for certain signs.”

Renotte told Insider that he also created a program that can read a person’s sound waves and facial expressions as they speak to determine how the person is feeling.

“This is only scratching the surface of what’s possible,” he said. “There is so much more that’s possible with these technologies. That is why I’m so passionate about it.”

As a largely self-taught coder, Renotte uses his popular YouTube channel to share practical ways for people to get started with data science, machine learning, and deep learning.

Gupta credits Renotte’s YouTube tutorial for encouraging her to use the model on ASL.

“To build a deep learning model solely for sign detection is a really hard problem but not impossible,” Gupta wrote in a LinkedIn comment.

“I’m just an amateur student but I am learning and I believe sooner or later our open source community which is much more experienced and learned than me will find a solution and maybe we can have deep learning models solely for sign languages.”

She added, “Therefore for a small dataset and a small scale individual project I think object detection did just fine. It’s just the idea of inclusion in our diverse society which can be implemented on a small scale.”

More than hand gestures

According to the World Federation of the Deaf, more than 300 sign languages are used by more than 70 million deaf people worldwide. In the US, ASL is the third most commonly used language after English and Spanish.

However, sign languages aren’t just about hand gestures – researchers in Ireland estimate that 70% of sign language comes from facial expressions – not to mention body movements. Capturing this aspect of sign language would require computer vision. Also, software needs to be able to translate complete sentences as well as individual signs.

Also, communication is a two-way street. For sign language translation to be effective, it needs to transcribe sign language into spoken and/or written language as well as the other way around.

While AI researchers tackle these challenges, Deaf people continue to face discrimination with regard to sign language use.

As Gupta shares, “I think the first step would be to normalize sign languages and other modes of communication with the specially-abled and work on bridging the communication gap.”