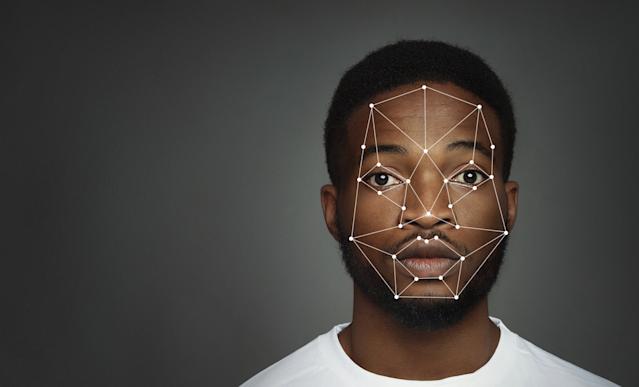

The Mall of America is facing backlash following the implementation of its new facial recognition technology. The use of the technology has raised privacy concerns among lawmakers, civil liberties advocates, and the general public.

Concerns Over Privacy and Misuse

Minnesota State Senators Eric Lucero (Republican) and Omar Fateh (Democrat) have united in urging the Mall of America to halt its facial recognition operations. “Public policy concerns surrounding privacy rights and facial recognition technologies have yet to be resolved, including the high risks of abuse, data breaches, identity theft, liability and

OpenAI’s ChatGPT chatbot shows racial bias when advising home buyers and renters, Massachusetts Institute of Technology (MIT) research has found. Today’s housing policies are shaped by a long history of discrimination from the government, banks, and private citizens. This history has created racial disparities in credit scores, access to mortgages and rentals, eviction rates, and persistent segregation in US cities. If widely used for housing recommendations, AI models that demonstrate racial bias could potentially worsen residential segregation in these cities.

Racially biased housing advice

Researcher Eric Liu from MIT examined

The type of advice AI chatbots give people varies based on whether they have Black-sounding names, researchers at Stanford Law School have found. The researchers discovered that chatbots like OpenAI’s ChatGPT and Google AI’s PaLM-2 showed biases based on race and gender when giving advice in a range of scenarios.

Chatbots: Biased Advisors?

The study “What’s in a Name?” revealed that AI chatbots give less favorable advice to people with names that are typically associated with Black people or women compared to their counterparts. This bias spans across various scenarios such as job

AI’s inability to detect signs of depression in social media posts by Black Americans was revealed in a study published in the Proceedings of the National Academy of Sciences (PNAS). This disparity raises concerns about the implications of using AI in healthcare, especially when these models lack data from diverse racial and ethnic groups.

The Study

The study, conducted by researchers from Penn’s Perelman School of Medicine and its School of Engineering and Applied Science, employed an “off the shelf” AI tool to analyze language in posts from 868 volunteers. These participants, comprising equal

Amazon-owned Ring will stop allowing police departments to request user doorbell camera footage without a warrant or subpoena following concerns over privacy and racial profiling.

Ring’s police partnerships

The Ring Doorbell Cam is a wire-free video doorbell that can be installed at the front doors of people’s homes. Amazon acquired Ring in 2018 for a reported $1 billion. In 2019, Amazon Ring partnered with police departments nationwide through its Neighbors app. Police could access Ring’s Law Enforcement Neighborhood Portal, which allowed them to view a map of the cameras’ locations and directly

The University of Washington’s recent study on Stable Diffusion, a popular AI image generator, reveals concerning biases in its algorithm. The research, led by doctoral student Sourojit Ghosh and assistant professor Aylin Caliskan, was presented at the 2023 Conference on Empirical Methods in Natural Language Processing and published on the pre-print server arXiv.

The Three Key Issues

The report picked up on three key issues and concerns surrounding Stable Diffusion, including gender and racial stereotypes, geographic stereotyping, and the sexualization of women of color.

Gender and Racial Stereotypes

The AI

Despite the controversy surrounding its facial recognition software, Clearview A.I. has found a new home amongst public defenders. In a move described as a “P.R. stunt to try to push back against the negative publicity,” the company has begun allowing public defenders to access its facial recognition database, which holds more than 20 billion facial images.

The controversy explained

Earlier this year, the controversial facial recognition program found itself amid legal drama after being fined more than £7.5 million by the U.K.’s privacy watchdog. The fine came after a few senators called on federal agencies

Forehead thermometers are widely used in hospitals and care settings around the world. However, the findings from a recent study suggest that these commonly used thermometers are less accurate in detecting fevers in Black patients than in white patients. Researchers found that 23% of fevers in Black patients went undetected when temporal (forehead) thermometers were used compared with oral (in-mouth) thermometers. As temperature readings are used to determine levels of care, inaccurate readings may lead to missed fevers, delayed diagnoses, and increased mortality in Black patients, contributing to further distrust

Silicon Valley-based startup Sanas is working to build real-time voice-altering technology that aims to make international workers sound more “Westernized.” For many years, Black workers have been advised to use their “white voice” when communicating with colleagues or customers in a professional working environment. Additionally, films such as “Sorry To Bother You” depict Black workers achieving greater success when they choose to emulate a “whiter” voice. Despite the program working to “protect the diverse voice identities of the world,” many wonder whether the product is actively working to remove unconscious