“There Was Definitely No Shock When I Heard About The Incident” – The Fallout after Facebook’s Algorithm Puts ‘Primate’ Label on Video of Black Men

A group of tech and diversity pioneers is calling for robust action after Facebook's facial recognition software mistakenly labeled a video featuring Black men as being "about primates."

Marcel Hedman, founder of the AI group Nural Research, which explores how artificial intelligence is being used to tackle global challenges, described the long-standing issue as a "multi-layered" problem that can "definitely be solved."

Mr. Hedman, 22, based in London, said: “There was definitely no shock when I heard about the Facebook incident, and I think the reason why is because this is something that has definitely happened before.

“But I think it’s something that naturally happens when you look at the way that datasets are constructed, and they are constructed based on data compiled from sources that each person chooses.

“So it’s not surprising at all when those preferences are tailored to the preferences of each person, which again represents natural bias.”


His comments follow Facebook's apology last week after its AI mistakenly labeled a video featuring Black men as being "about Primates."

The video, published by the Daily Mail in June 2020, showed clips of Black men and police officers, but an automatic prompt asked users whether they would like to "keep seeing videos about Primates."

Facebook immediately disabled the feature responsible for the "error," but such mistakes are not uncommon in the industry. In fact, they have been occurring for at least half a decade.

In 2015, Google Photos mistakenly labeled pictures of Black people as "gorillas." Google apologized and promised to fix the error, but Wired later found that its solution was simply to block the words "gorilla," "chimp," "chimpanzee," and "monkey" from searches.

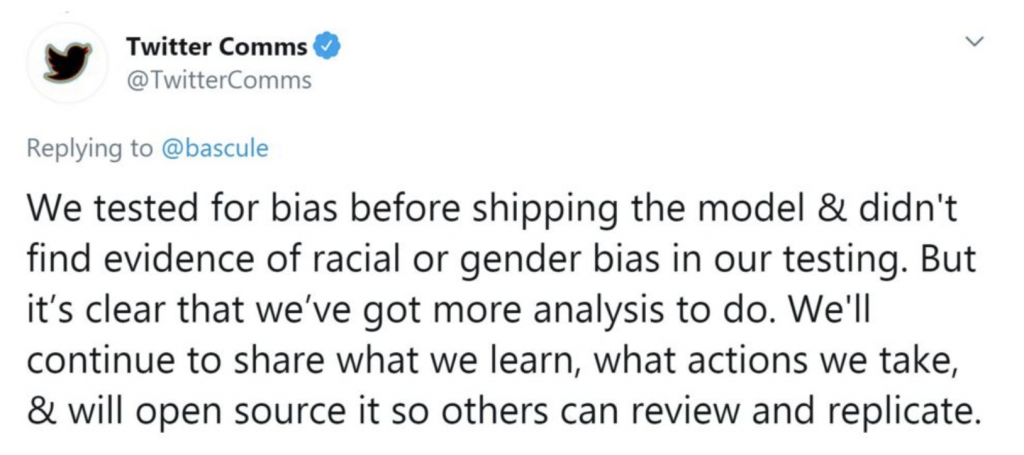

Last year, Twitter came under fire after users noticed that when a post contained two photos, one of a Black person and one of a white person, the app's automatic image cropping often showed only the white face in previews on mobile.

Wider issues

Previous studies, including a 2019 evaluation by the US National Institute of Standards and Technology, have found that facial recognition technology is biased against people of color and is less accurate at identifying them.

This has led to incidents in which people from these communities have been discriminated against or even arrested because of so-called computer errors.

But facial recognition isn't used only by tech giants such as Facebook, Google, and Twitter. Police and private companies in the UK and abroad have also been rolling out surveillance cameras with these tools embedded.

In January 2020, Robert Williams became the first known case of someone in the US being wrongfully arrested because of a faulty facial recognition match, according to the American Civil Liberties Union.

Mr. Williams, who was accused of a crime he did not commit, has spoken openly about the impact the experience had on him and his family.

Just because we can doesn’t mean we should

Mr. Hedman said he believed technological advancement was necessary but urged people to develop their digital literacy.

“I think we need to ensure that we have a high level of what I’d say is digital literacy, which will basically enable anyone who is receiving data to understand what that data actually means, in context, and where it comes from.

“But the other thing is, and this is from my own standpoint, I believe that it’s super important for us to ask, in which situations should we specifically be using machine learning and AI? And even if we could, we should definitely ask ourselves about where do we want to use and deploy the technology; just because it’s effective doesn’t mean we should use it.”

Mark Johnson of Big Brother Watch, a UK civil liberties group that campaigns against facial recognition surveillance, echoed these views. He called the Facebook incident "inexcusable," adding that "examples like this demonstrate just one of the fundamental flaws with automated recognition technology, which can have a devastating effect on minority communities when deployed by those in positions of power."

Access and diversity

Esther Akpovi, a legal innovation and technology student at the University of Law, believes more needs to be done to prevent further damage to people's lives.

She believes it starts with access and diversity.

“I feel like more needs to be done to ensure there is access and diversity in the tech industry, specifically in areas such as A.I. At the end of the day, if there isn’t enough diversity, certain things can ultimately go unrecognized or unscrutinized.

"And, of course, with bigger companies and establishments now using A.I., it's essential that we actually tackle these issues right now so that they don't lead to long-term impacts."

The 21-year-old, who works as a venture scout at Ada Ventures, added that investors should actively fund tech businesses that already put diversity and inclusion at the forefront of their products.

"I think that it's imperative now, post Black Lives Matter and all that happened last year, that people don't just talk about doing better for ethnic minority communities, but actually do the work, and part of that is investing in A.I. and tech built by them.

"There's been increased focus on this, which is good, from the likes of Black Seed Capital and Ada Ventures, who invest in and support founders, but we have to keep doing this."