Hispanic and Latine employees feel pressured to conform to mainstream office norms at the expense of their authentic selves and cultural heritage, a new study by Coqual has revealed. Hispanic and Latine professionals represent a rapidly growing demographic in the U.S. workforce, yet they continue to navigate several stereotypes, colorism, and cultural invisibility. The Coqual study used a mixed-method approach, including surveys, focus groups, and expert interviews, involving more than 2,300 full-time professionals across the United States.

The pressure to assimilate

Key findings indicate that 68% of Hispanic and Latine professionals with sponsors

The type of advice AI chatbots give people varies based on whether they have Black-sounding names, researchers at Stanford Law School have found. The researchers discovered that chatbots like OpenAI’s ChatGPT and Google AI’s PaLM-2 showed biases based on race and gender when giving advice in a range of scenarios.

Chatbots: Biased Advisors?

The study “What’s in a Name?” revealed that AI chatbots give less favorable advice to people with names that are typically associated with Black people or women compared to their counterparts. This bias spans across various scenarios such as job

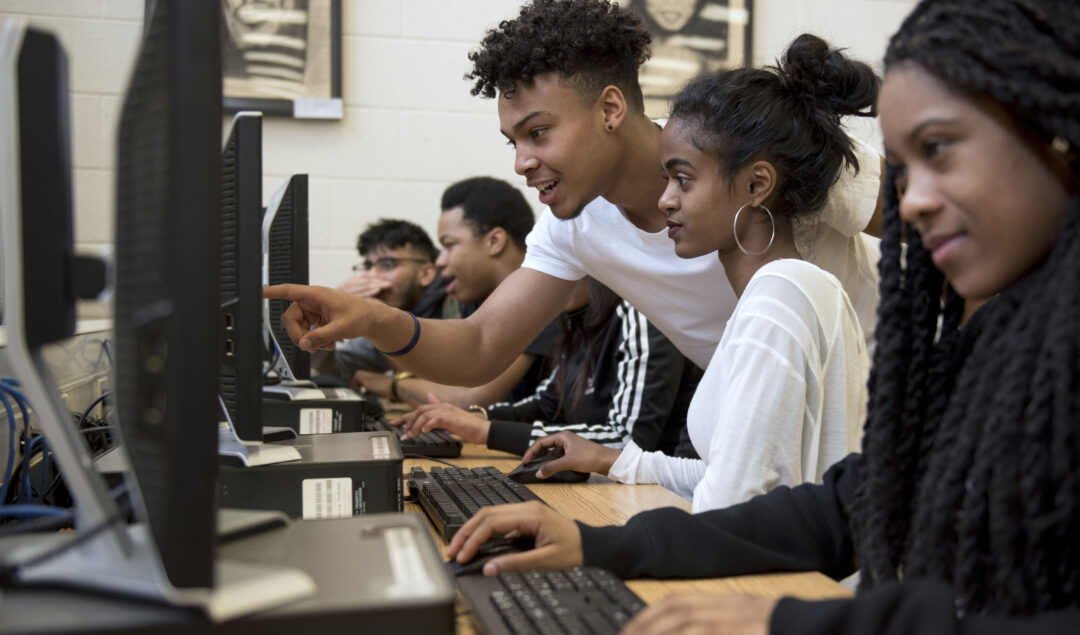

There is a large digital divide affecting low-income and Black or Indigenous majority schools, a recent report by Internet Safety Labs (ISL) has found.

Ads and trackers

The report “Demographic Analysis of App Safety, Website Safety, and School Technology Behaviors in US K-12 Schools” explores technological disparities in American schools, focusing mainly on marginalized demographics. This research expands on ISL’s previous work on the safety of educational technology across the country and is supported by the Internet Society Foundation. It reveals how schools of different backgrounds use technology and the risks involved. One

Black women on teams with a larger number of white peers may have worse job outcomes, a new study has found. Elizabeth Linos, the Emma Bloomberg Associate Professor for Public Policy and Management, along with colleagues Sanaz Mobasseri from Boston University and Nina Roussille from MIT, conducted the study.

Underrepresentation of People of Color In Leadership

According to the study, the underrepresentation of people of color in high-wage jobs, especially leadership positions, remains an unsolved problem. To better understand and reduce racial inequalities, researchers have often focused on

Some of the most high-profile AI chatbots generate responses that perpetuate false or debunked medical information about Black people, a new study has found. While large language models (LLMs) are being integrated into healthcare systems, these models may advance harmful, inaccurate race-based medicine.

Perpetuating debunked race myths

A study by Stanford School of Medicine doctors assessed whether four AI chatbots responded with race-based medicine or misconceptions around race. They looked at OpenAI’s ChatGPT, OpenAI’s GPT-4, Google’s Bard, and Anthropic’s Claude. All four models used debunked race-based information when asked

EXHALE, a well-being app for Black women and women of color, has reported that almost 2 in 5 (36%) Black women have left their jobs because they felt unsafe.

The State of Self-Care for Black Women report

EXHALE recently published its “The State of Self-Care for Black Women” report based on its survey of 1,005 Black women in the U.S. The report states that while diversity, equity and inclusion initiatives are expected in institutions today, fostering safe spaces for Black women requires more specific resources to focus on their

A study assessing the working conditions of Black workers during the pandemic has found that they preferred working remotely to working in the office because they felt more valued and supported. Although working from home has its challenges, from endless Zoom calls to juggling child care duties, for many Black workers in white-dominated jobs, getting out of the office has resulted in a vast improvement in their employee experience. That is according to a survey by the Future Forum, a research consortium created by software maker Slack Technologies. The survey of more than