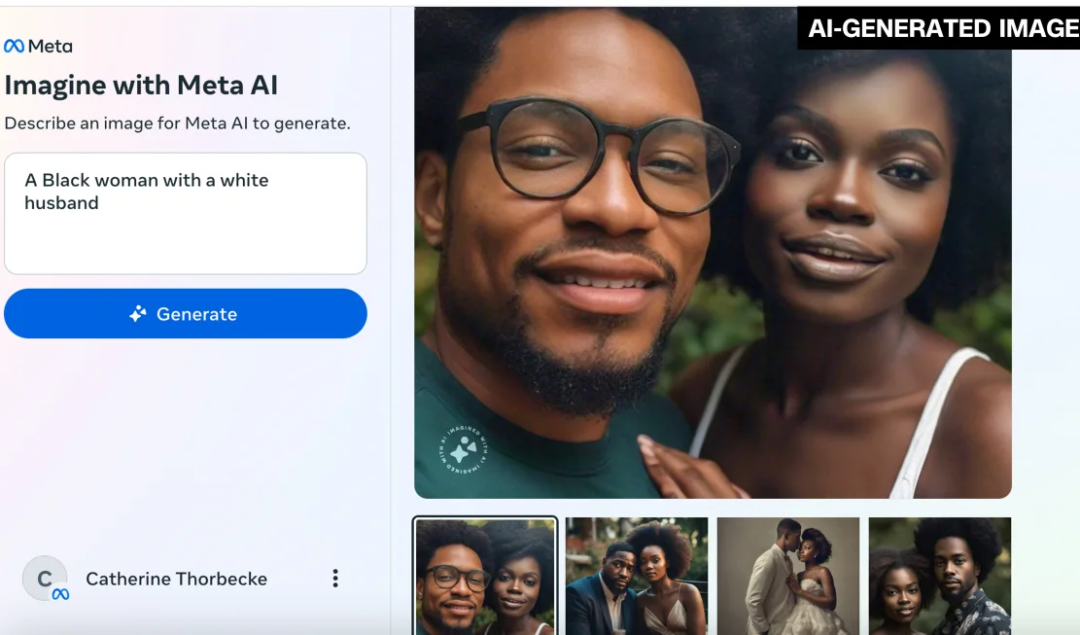

Meta’s AI-powered image generator has recently been scrutinized for its difficulty in generating images of interracial couples and friends.

Meta’s AI Fails To Generate Interracial Couples

The AI tool, introduced in December, revealed its shortcomings when CNN tested its ability to create pictures of people from different racial backgrounds. The requests for images of interracial relationships consistently resulted in the AI producing images of same-race couples or friends, contradicting the diversity seen in real-world relationships. For instance, a request for a Black woman with a white husband yielded images of Black couples. This pattern was broken

Google’s latest AI tool, Gemini, designed to create diverse images, has been temporarily stopped after sparking controversy over its depiction of historical figures.

AI Tool Gemini

Gemini was made to create realistic images based on users’ descriptions in a similar manner to OpenAI’s ChatGPT. Like other models, it is trained not to respond to dangerous or hateful prompts and to introduce diversity into its outputs. However, users reported that Gemini, in its attempt to avoid racial bias, often generated images with historically inaccurate representations of gender and ethnicity. For example,

The University of Washington’s recent study on Stable Diffusion, a popular AI image generator, reveals concerning biases in its algorithm. The research, led by doctoral student Sourojit Ghosh and assistant professor Aylin Caliskan, was presented at the 2023 Conference on Empirical Methods in Natural Language Processing and published on the pre-print server arXiv.

The Three Key Issues

The report identified three key concerns surrounding Stable Diffusion: gender and racial stereotypes, geographic stereotyping, and the sexualization of women of color.

Gender and Racial Stereotypes

The AI