Controversial Facial Recognition Company Clearview AI Fined In The UK For Illegally Storing Facial Images

Facial recognition company Clearview AI, known for gathering images from the internet to build a global facial recognition database, has been fined more than £7.5 million by the UK’s privacy watchdog.

The fine comes just months after a group of US lawmakers called on federal agencies to stop using facial recognition technology built by Clearview AI.

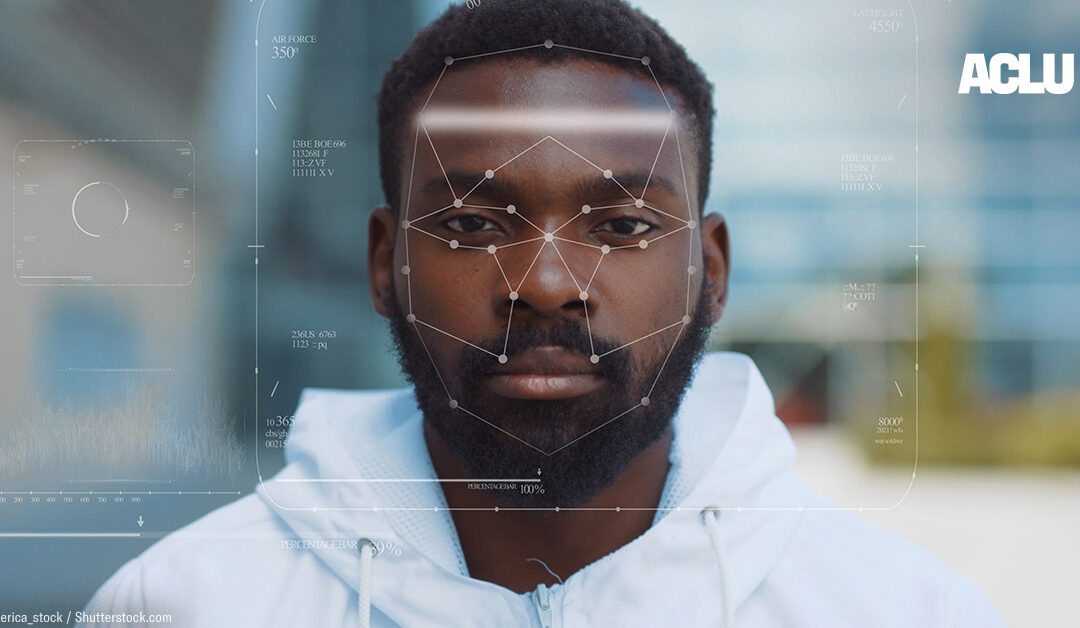

In letters signed by Sens. Edward Markey and Jeffrey Merkley, as well as Reps. Pramila Jayapal and Ayanna Pressley, the lawmakers said the technology poses “unique threats” to Black communities, other communities of color, and immigrant communities.

How exactly does Clearview work? It collects publicly posted pictures from Facebook, Instagram, and other sources, usually without the knowledge or permission of the platforms or the people pictured.

The Information Commissioner’s Office (ICO) found that the startup, a Peter Thiel-backed surveillance business valued at $130 million, had breached UK data protection laws by failing to use the information of people in the UK in a fair and transparent way.

It also lacked a lawful reason for collecting people’s information and had no process in place to stop the data from being retained indefinitely.

Although Clearview AI Inc no longer offered its services to UK organizations, the company had customers in other countries and was still using the personal data of UK residents, the BBC reported.

The company has now been ordered by the ICO to delete the data of UK residents.

According to the ICO, the company has stored more than 20 billion facial images.

In November 2021, the ICO said that the company was facing a fine of up to £17m, almost £10m more than it has now been ordered to pay, the broadcaster added.

The fine also comes two years after Clearview pulled out of Canada in 2020 following an investigation into its methods.

Its founder, Hoan Ton-That, who launched the technology in 2016, previously maintained that the platform was intended to serve only government users for law enforcement applications. To avoid misuse, he said, the company would refrain from making a consumer version of Clearview AI.

But campaigners, activists, and some members of the Black community are outraged that governments and law enforcement agencies would even consider using the system.

This is primarily because facial recognition systems are known to produce inaccurate matches.

Multiple incidents demonstrate that innocent people can be drawn into criminal cases when facial recognition algorithms decide they look like someone else. More often than not, these systems show racial bias. In 2019, for instance, Michael Oliver, a young African American man, was wrongfully accused and arrested by Detroit police.

In cases that came to light in 2020, Robert Williams and Nijeer Parks, also African Americans, were accused and arrested because of inaccurate facial recognition matches.

A 2018 study by the Massachusetts Institute of Technology found that commercial facial analysis systems had error rates of up to 34.7% for dark-skinned women, compared with 0.8% for fair-skinned men.